Australia’s iconic laid-back attitude of ‘if it isn’t broken, don’t fix it’ is often admirable; we are a nation that ‘goes with the flow’, rolls up its sleeves and gets ‘stuck in’ following natural disasters like floods. The community response around Lismore these past few weeks is certainly testimony to this attitude, with every shop owner and household cleaning up and removing the piles of flood-damaged items that now line the streets as a reminder of the extent of personal loss. But as one coffee shop owner shared with me, this flood has created a sense of trauma, fear and deep uncertainty about the future safety of the town.

Investigating ‘big floods’:

As someone who has investigated ‘big floods’, I know that the way we currently predict floods is out of date, yet there seems to be an inbuilt resistance to change in some institutions. The question my team and I are asking is:

What if it is broken and we don’t fix it? What if how we are predicting flood risk isn’t the best we can do?

My name is Dr Jacky Croke and I have been part of a team that has spent the last five years working to improve flood frequency analysis, to better prepare for the sort of extreme flood events we witnessed in 2011 in the Lockyer Valley and again recently in the wake of Cyclone Debbie (www.thebigflood.com.au).

Our work has highlighted three key problems with predicting extreme floods in Australia.

- The river gauging records used to predict the frequency of these extreme events are on average less than 40 years long. This means we are often using records that begin as recently as the 1970s to predict the timing of events that occur maybe once every 100 or even 1,000 years. You don’t need to be a statistician to see the fundamental problem here.

- Some of those 40-year records do not contain even one flood of similar magnitude to those we have witnessed recently, while in other areas the record can contain three or more in the space of less than five years. You will get a vastly different prediction depending on which scenario applies.

- All our efforts are being directed into improving the statistical modelling of these 40 years of data, but no matter how well we ‘model’ those 40 years, it simply isn’t a long enough window in time to capture the known variability of flood and drought.
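To put rough numbers on the first two points, here is a minimal sketch of the chance that a gauge record contains at least one flood of a given size. It assumes every year is independent and has the same annual exceedance probability, which is a textbook simplification rather than a description of any particular catchment, and the function name is purely illustrative.

```python
def chance_of_at_least_one(return_period_years: float, record_years: int) -> float:
    """Probability that a record of `record_years` contains at least one
    flood at or above the `return_period_years` level.

    Assumes independent years with a constant annual exceedance
    probability of 1 / return_period_years (a simplifying assumption).
    """
    annual_probability = 1.0 / return_period_years
    # Chance of no such flood in any year, subtracted from 1.
    return 1.0 - (1.0 - annual_probability) ** record_years


if __name__ == "__main__":
    for return_period in (100, 1000):
        p = chance_of_at_least_one(return_period, record_years=40)
        print(f"1-in-{return_period}-year flood in a 40-year record: {p:.1%}")
```

Under these assumptions a 40-year record has only about a one-in-three chance of containing a 1-in-100-year flood, and roughly a 4% chance of containing a 1-in-1,000-year flood, so most short records simply never see the events we are trying to predict.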

So why are we continuing to predict floods the same way? Every engineer and flood manager in the country knows these problems exist. However, we have seen no progress in the uptake, or even exploration, of complementary approaches for improved flood frequency analysis.

Palaeoflood hydrology:

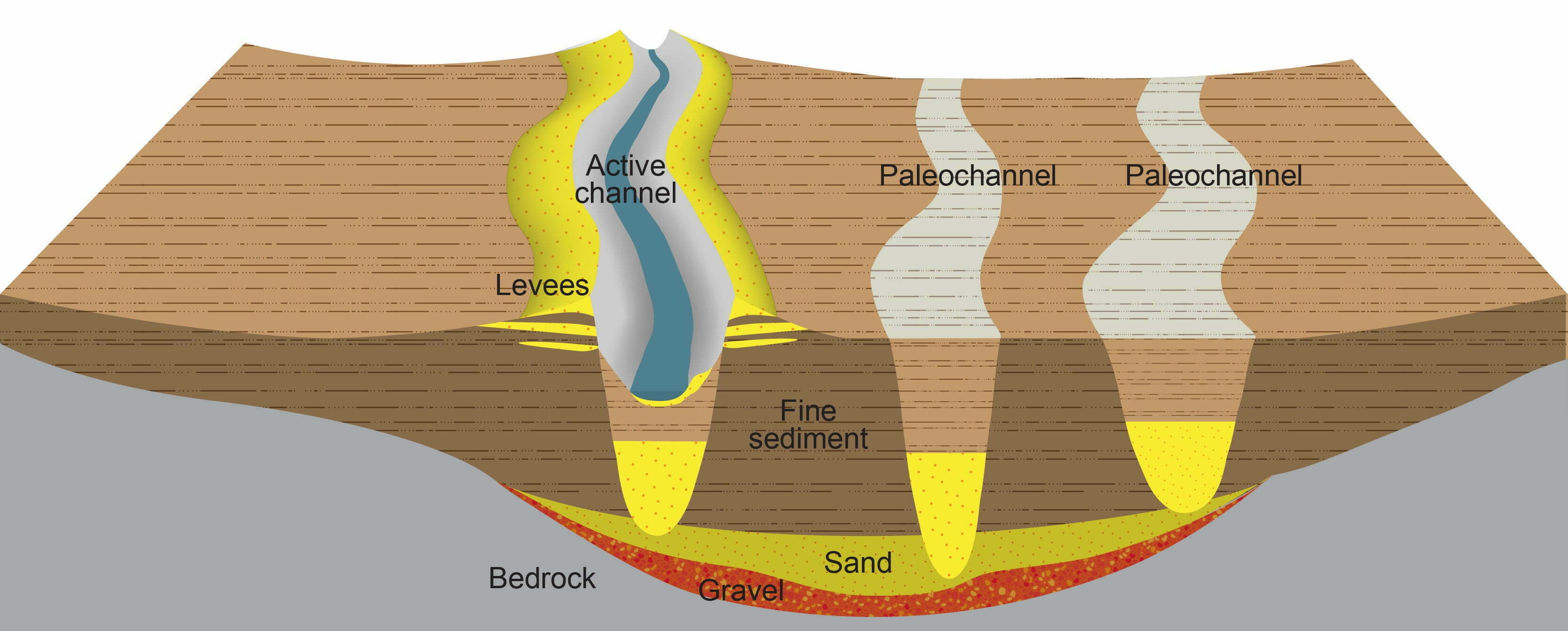

Palaeoflood hydrology is the science of reconstructing large floods that occurred before human observations and systematic measurements began. Records of these past floods help improve our understanding of their frequency and magnitude, and therefore improve flood forecasting and mitigation. As a discipline, it was established over 50 years ago, and in many countries it is now widely used as a complementary approach to improving flood prediction. Figure 1 shows where deposits are left after each flood event: on the adjacent floodplain and in old channels (palaeochannels). We can access a vast record of past flood history by examining the nature and timing of these deposits in the landscape.

Over the past decade alone, China, the USA and countries across Europe have used palaeoflood hydrology to reduce uncertainty on flood magnitude predictions for dam design. Australia, by contrast, has seen no growth in the number of palaeoflood studies since the 1980s, and our palaeoflood database is extremely limited geographically.

Palaeoflood hydrology applied in Australia:

Recent findings using dated palaeoflood slackwater deposits from three major basins in South East Queensland demonstrate that palaeoflood hydrology is the single most effective contribution we can make to improved flood predictions and flood risk management in Australia. Extending the flood record back decades, or in some cases millennia, instantly provides the longer time window needed for more robust statistical estimates of flood frequency.

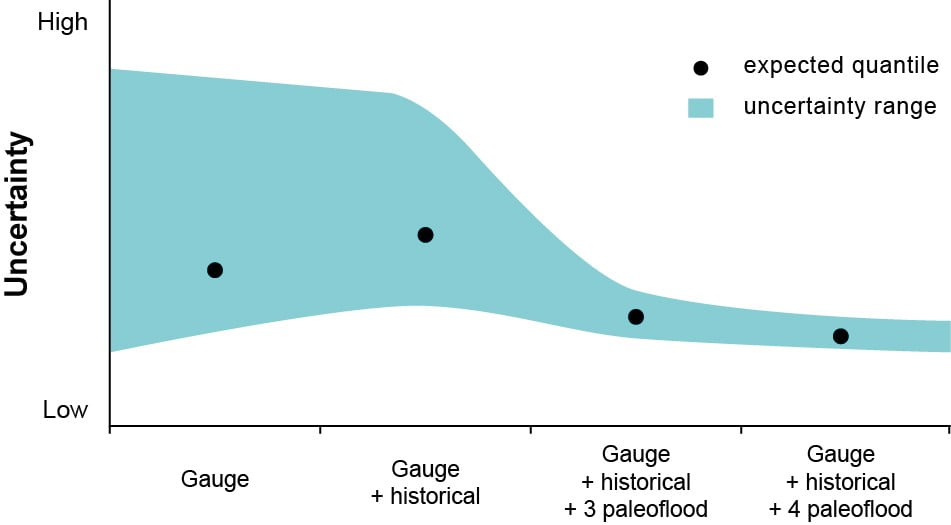

The effect this has on reducing uncertainty is massive. For example, current predictions of a 1-in-100-year event are usually illustrated using a single discharge value, such as 10,000 m³/s. The confidence limits on this prediction may stretch that estimate to anywhere between 1,000 and 100,000 m³/s.

This means that every time we ‘engineer’ a road culvert or bridge structure to the design standard of a 1-in-100-year flood, we never see or address the uncertainties around that prediction. To stay within the 90% confidence limits of the prediction, the selected culvert or bridge size may need to be an order of magnitude smaller or bigger!

Figure 2 illustrates the predicted flood estimate, with the associated uncertainty around that estimate shaded in blue. We can see that the uncertainty range is quite large when we use just gauging data, and this is only slightly reduced by adding historical data. The big difference in uncertainty range comes when we incorporate palaeoflood data into this estimate. The uncertainty range can be reduced by between 45 and 75%. This means we can be a lot more confident in the estimate.
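The link between record length and the width of that uncertainty range can be sketched with a small simulation. This is an illustration under stated assumptions, not the analysis behind Figure 2: it assumes annual peak flows follow a Gumbel distribution fitted by the method of moments (chosen only for simplicity), the ‘true’ parameters are invented, and it treats a longer record as if it were more gauged years, whereas real palaeoflood data need specialised statistical handling. All function names are hypothetical.

```python
import math
import random
import statistics

EULER_GAMMA = 0.5772156649  # Euler-Mascheroni constant, appears in the Gumbel mean


def gumbel_quantile(location, scale, return_period_years):
    """Flow exceeded on average once every `return_period_years` years."""
    non_exceedance = 1.0 - 1.0 / return_period_years
    return location - scale * math.log(-math.log(non_exceedance))


def draw_annual_peak(rng, location, scale):
    """Draw one synthetic annual peak flow by inverting the Gumbel CDF."""
    # Clamp away from 0 and 1 so the double logarithm is always defined.
    u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
    return location - scale * math.log(-math.log(u))


def fit_gumbel(record):
    """Method-of-moments Gumbel fit: returns (location, scale)."""
    scale = statistics.stdev(record) * math.sqrt(6.0) / math.pi
    location = statistics.fmean(record) - EULER_GAMMA * scale
    return location, scale


def estimate_spread(record_years, trials=1000, seed=1,
                    true_location=1000.0, true_scale=500.0):
    """5th and 95th percentiles of the fitted 1-in-100-year flow.

    The 'true' location and scale (in m3/s) are made-up values.
    """
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        # Simulate one gauge record, fit it, and store its 100-year estimate.
        record = [draw_annual_peak(rng, true_location, true_scale)
                  for _ in range(record_years)]
        location, scale = fit_gumbel(record)
        estimates.append(gumbel_quantile(location, scale, 100))
    cut_points = statistics.quantiles(estimates, n=20)  # 19 cut points, 5% apart
    return cut_points[0], cut_points[-1]


if __name__ == "__main__":
    for years in (40, 400):
        low, high = estimate_spread(years)
        print(f"{years}-year record: 1-in-100-year estimate spans "
              f"{low:,.0f} to {high:,.0f} m3/s in 90% of trials")
```

In this toy setting the spread of estimates from 400-year records is much narrower than from 40-year records, even though both are drawn from exactly the same underlying river. That is the basic reason a longer flood history helps.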

Reducing uncertainty:

Palaeoflood hydrology allows us to reduce that uncertainty by at least 50%. What does this mean? It means that rather than assuming that the most probable prediction affords reasonable safety, we can narrow that uncertainty to something we should all feel more comfortable with.

So the question is: why is Australia lagging behind the rest of the world in incorporating this approach into improved flood frequency predictions?

We don’t know the answer to that; maybe it’s a lack of awareness, or the assumption that the existing approach isn’t in fact ‘broken’. Our team believes it is broken and that there is a need for change. As more and more Australians experience the direct cost of these uncertainties, we need to work together with local councils and governments to use approaches we know can reduce uncertainty and the negative impacts of flooding on local communities.

Fixing it doesn’t involve throwing the baby out with the proverbial bathwater; it involves loosening the grip on existing engineering design standards for flood prediction and being open to the possibility that what we currently use isn’t good enough. Most importantly, it involves working together, sharing knowledge and considering new approaches.

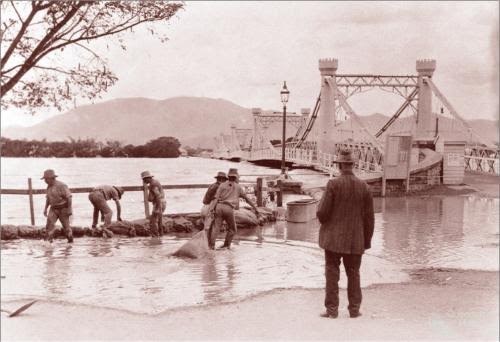

By looking to the past we can inform the future. Flooding in Rockhampton in the 1920s.

Spreading the word:

As the Big Flood project has now officially wrapped up, we welcome opportunities to keep spreading the word on possible improvements to flood risk management in Australia. I will be presenting a keynote at the forthcoming Floodplain Management Australia National Conference, ‘Preparing for the next big flood’, being held in Newcastle on May 16-19, which will be attended by a diverse audience of engineers and personnel from local councils and emergency services.

Brisbane floods, large scale, high intensity, high cost.

In light of recent events in the Northern Rivers region, key findings from the Big Flood project will also be presented in Bangalow on May 23rd as part of the River Basin Management Society’s inaugural Northern Rivers branch. Event details can be found here. We welcome anyone interested in the topic of floods and flood management to come along for a chat.

You can also check out the Big Flood website, which has lots of great information, infographics explaining palaeoflood hydrology and free resources.

Jacky can be contacted on jacky@catchmentconnections.com.au